September 28, 2017

Why Man-in-the-Middle Detection is Overrated

Last week, Nick Sullivan launched mitm.watch, a website that purports to tell you whether or not your HTTPS connection is being intercepted by a man-in-the-middle (MitM). mitm.watch uses Caddy's HTTPS MitM Detection Feature, which implements the techniques described in this paper. Basically, Caddy compares the browser name and version number advertised by the User-Agent header to the properties of the TLS handshake initiated by the client (e.g. ciphersuites). If the TLS handshake doesn't match the known properties of the purported browser, then the TLS handshake was probably not initiated by the browser, but by a man-in-the-middle. Caddy's documentation suggests that you could display an error message if a MitM is detected. mitm.watch displays either a green "No MITM!" page, or a red "Likely MITM!" page.
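To make the comparison concrete, here is a minimal sketch of the idea. It is not Caddy's implementation: the fingerprint table, the browser and cipher suite names, and the function names are all hypothetical, and a real detector would look at many more handshake properties than the list of offered cipher suites.

```python
# Hypothetical fingerprints: for each browser token, the cipher suites it is
# assumed to offer, in order. The names are placeholders, not real suites.
KNOWN_FINGERPRINTS = {
    "ExampleBrowser/50": ("SUITE_A", "SUITE_B", "SUITE_C"),
    "OtherBrowser/11": ("SUITE_B", "SUITE_D"),
}


def browser_from_user_agent(user_agent):
    """Return the first known browser token found in the User-Agent, if any."""
    for name in KNOWN_FINGERPRINTS:
        if name in user_agent:
            return name
    return None


def looks_intercepted(user_agent, offered_suites):
    """Return True or False, or None when the browser is not in the table."""
    browser = browser_from_user_agent(user_agent)
    if browser is None:
        return None
    # A mismatch suggests the handshake was not produced by the claimed browser.
    return tuple(offered_suites) != KNOWN_FINGERPRINTS[browser]
```

Note that a client offering exactly the suites in the table is reported as not intercepted, whoever it really is.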

Unfortunately, there is a significant and intractable shortcoming to MitM detection: a MitM can defeat the detection by making its TLS implementation work exactly like that of the browser it's proxying, or at least similar enough that the differences are not observable by the server. You should assume a malicious MitM (one designed to steal data) will conceal itself this way. And if websites start displaying errors when a MitM is detected, you should expect the makers of commercial TLS interception devices (e.g. Bluecoat) to respond by making their interception devices indistinguishable from browsers.

We need to stop obsessing over MitM detection. In addition to server-side MitM detection, another recurring idea is to apply HTTP Public Key Pinning (HPKP) to certificates issued by private certificate authorities (e.g. those used by MitM devices), or to display a special icon in the browser when an HTTPS connection uses a private certificate authority. These proposals are barking up the wrong tree. Short of protocol or implementation vulnerabilities, there are only two ways a TLS connection can be intercepted without the consent of the server operator: one, an unauthorized certificate is issued by a publicly-trusted certificate authority, or two, a private certificate authority has been added to the client's trust store. (I assume the website is using HSTS, which prevents certificate errors from being bypassed.) For the first case, we have Certificate Transparency, which is better than even pie-in-the-sky MitM detection, since it detects rogue certificates even if they are never used. And the second case can only happen if the client's trust store is modified. At that point, the client's security should be considered compromised, as the ability to modify the trust store typically implies the ability to do much worse, such as install spyware that monitors and exfiltrates everything you do, without so much as touching a TLS connection. It's pointless to try to ensure end-to-end encryption when the security of an endpoint is in doubt.

That said, there is one potential benefit to MitM detection. Despite claiming to improve security, many commercial TLS interception devices actually harm security by using TLS client implementations that are vastly inferior to those of modern browsers. For instance, they use old, insecure ciphers, or even neglect to validate the certificate. If the makers of commercial TLS interception devices are forced to emulate the TLS implementations of browsers to avoid detection, they may end up improving their security in the process. However, if this is the goal, MitM detection is rather superfluous: servers might as well just check for insecure attributes of the connection and raise an error if found, MitM or not. After all, MitMs are not the only perpetrators of poor TLS security; there are plenty of old and insecure browsers out there as well. Jeff Hodges' How's My SSL, which recently launched a subscription service that lets you use it on your own site, is one example of this approach.
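A rough sketch of what checking for insecure attributes directly could look like follows. It is not how How's My SSL works; the policy sets and the function name are assumptions chosen for illustration. The inputs are the kind of strings that Python's ssl.SSLSocket.version() and ssl.SSLSocket.cipher() report for a negotiated connection.

```python
# Assumed policy for illustration; pick values that suit your own threat model.
INSECURE_PROTOCOLS = {"SSLv2", "SSLv3", "TLSv1", "TLSv1.1"}
# Substrings of OpenSSL-style cipher names that indicate a weak component.
INSECURE_CIPHER_MARKERS = ("RC4", "DES", "NULL", "EXP", "MD5")


def insecure_attributes(protocol, cipher_name):
    """List the reasons a negotiated connection is considered insecure."""
    problems = []
    if protocol in INSECURE_PROTOCOLS:
        problems.append("outdated protocol " + protocol)
    for marker in INSECURE_CIPHER_MARKERS:
        if marker in cipher_name.upper():
            problems.append("weak cipher component " + marker)
    return problems
```

An empty list means nothing objectionable was found. The check never asks whether the client is a MitM or an old browser, which is the point.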

January 24, 2017

Thoughts on the Systemd Root Exploit

Sebastian Krahmer of the SUSE Security Team has discovered a local root exploit in systemd v228. A local user on a system running systemd v228 can escalate to root privileges. That's bad.

At a high level, the exploit is trivial:

- Systemd uses -1 to represent an invalid mode_t (filesystem permissions) value.

- Systemd was accidentally passing this value to open when creating a new file, resulting in a file with all permission bits set: that is, world-writable, world-executable, and setuid-root.

- The attacker writes an arbitrary program to this file, which succeeds because it's world-writable.

- The attacker executes this file, which succeeds because it's world-executable.

- The attacker-supplied program runs as root, because the file is setuid-root.
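The arithmetic behind "all permission bits set" can be checked in a few lines. This sketch assumes mode_t is an unsigned 32-bit integer, as on Linux, and that no umask bits are removed; the variable names are mine.

```python
import ctypes
import stat

# In C, converting -1 to an unsigned mode_t wraps around to all bits set.
# mode_t is assumed to be 32 bits wide here, as on Linux.
invalid_mode = ctypes.c_uint32(-1).value

# Only the low twelve bits (07777) hold the permission and special bits.
permission_bits = invalid_mode & 0o7777

is_setuid = bool(permission_bits & stat.S_ISUID)
world_writable = bool(permission_bits & stat.S_IWOTH)
world_executable = bool(permission_bits & stat.S_IXOTH)
```

All three flags come out true, which is exactly the combination the exploit steps rely on.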

In mitigation: The vulnerability was fixed a year ago and less than three months after it was introduced. It is present only in v228.

In aggravation: The vulnerability was mislabeled at the time as a local denial-of-service and the systemd team did not request a CVE-ID for it. Had they requested a CVE-ID, someone may have noticed that this was more than a DoS. (Krahmer accurately points out that the systemd commit log is "really huge," which makes it hard to spot security-relevant commits.)

In mitigation: The vulnerability depends on a yet-unfixed hole in how Linux clears a file's setuid and setgid bits when writing to it. Systemd merely creates an empty setuid-root file. Gaining root requires writing to this file, and when a non-root user writes to a setuid-root file, the setuid bit is supposed to be cleared. halfdog found a clever way to circumvent this by tricking a root process into writing to the file instead. This is an extremely interesting vulnerability in itself and I can't wait to dive deeper into it.

In aggravation: The vulnerability would have been prevented if systemd used a fail-safe umask rather than setting it to 0, something I called out last September as evidence of systemd's poor security hygiene. A more sensible umask, such as 022, would have caused open to create the setuid-root file without world-writable permissions, preventing exploitation. However, systemd maintainer David Strauss rejected a safe umask with a completely illogical argument that shows his cluelessness over how systemd uses umask.
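As a worked example of the umask argument: the mode a new file receives is the requested mode with the umask bits removed. The helper name below is hypothetical; the calculation itself is the standard one.

```python
import stat


def effective_mode(requested, umask):
    """Mode a newly created file receives: requested bits minus umask bits."""
    return requested & 0o7777 & ~umask


# The accidental "all bits set" request under systemd's umask of 0,
# and under a conventional umask of 022.
with_umask_0 = effective_mode(0o7777, 0o000)
with_umask_022 = effective_mode(0o7777, 0o022)
```

Under 022 the result is 07755: the file would still be setuid and world-executable, but no longer writable by other users, so an unprivileged attacker could not put a program into it.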

Lastly, this is yet another example of "The Billion Dollar Mistake": systemd was using a magic value (-1) to represent an invalid mode_t value, and C's type system did not prevent passing it to the mode argument of open. A language with a better type system, such as Rust or C++ (which has std::optional), can help prevent this kind of error.

That said, this is not about programming languages. Dovecot (among a handful of others) has demonstrated that adherence to good coding practices can produce secure software written in C. Rewriting systemd in a safer language would not transform it into quality software, although certain classes of bugs would likely be reduced or eliminated.

Rather, this is about lock-in. Systemd is introducing unprecedented lock-in to the Linux userspace. They are replacing previously-independent userspace services with ones whose development is controlled by the systemd project and which only work if systemd is PID 1. They are defining their own non-standard protocols and encouraging applications to use them. They have even replaced DNS with a dbus-based protocol, which they "strongly recommend" applications use instead of DNS. Sadly, the most recent version of Ubuntu ships with this travesty.

Systemd's developers have repeatedly demonstrated their poor judgment and unfitness to hold such responsibility. Unfortunately, the lock-in they're creating will deprive people of the ability to vote with their feet and switch to better alternatives.

October 2, 2016

Systemd is not Magic Security Dust

Systemd maintainer David Strauss has published a response to my blog post about systemd. The first part of his post is replete with ad hominem fallacies, strawmen, and factual errors. Ironically, in the same breath that he attacks me for not understanding the issues around threads and umasks, he betrays an ignorance of how the very project which he works on uses threads and umasks. This doesn't deserve a response beyond what I've called out on Twitter.

In the second part of his blog post, Strauss argues that systemd improves security by making it easy to apply hardening techniques to the network services which he calls the "keepers of data attackers want." According to Strauss, I'm "fighting one of the most powerful tools we have to harden the front lines against the real attacks we see every day." Although systemd does make it easy to restrict the privileges of services, Strauss vastly overstates the value of these features.

The best systemd can offer is whole application sandboxing. You can start a daemon as a non-root user, in a restricted filesystem namespace, with mandatory access control. Sandboxing an entire application is an effective way to run potentially malicious code, since it protects other applications from the malicious one. This makes sandboxing useful on smartphones, which need to run many different untrustworthy, single-user applications. However, since sandboxing a whole application cannot protect one part of the application from a compromise of a different part, it is ineffective at securing benign-but-insecure software, which is the problem faced on servers. Server applications need to service requests from many different users. If one user is malicious and exploits a vulnerability in the application, whole application sandboxing doesn't protect the other users of the service.

For concrete examples, let's consider Apache and Samba, two daemons which Strauss says would benefit from systemd's features.

First Apache. You can start Apache as a non-root user provided someone else binds to ports 443 and 80. You can further sandbox it by preventing it from accessing parts of the filesystem it doesn't need to access. However, no matter how much you try to sandbox Apache, a typical setup is going to need a broad amount of access to do its job, including read permission to your entire website (including password-protected parts) and access to any credential (database password, API key, etc.) used by your CGI, PHP, or similar webapps.

Even under systemd's most restrictive sandboxing, an attacker who gains remote code execution in Apache would be able to read your entire website, alter responses to your visitors, steal your HTTPS private keys, and gain access to your database and any API consumed by your webapps. For most people, this would be the worst possible compromise, and systemd can do nothing to stop it. Systemd's sandboxing would prevent the attacker from gaining access to the rest of your system (absent a vulnerability in the kernel or systemd), but in today's world of single-purpose VMs and containers, that protection is increasingly irrelevant. The attacker probably only wants your database anyways.

To provide a meaningful improvement to security without rewriting in a memory-safe language, Apache would need to implement proper privilege separation. Privilege separation means using multiple processes internally, each running with different privileges and responsible for different tasks, so that a compromise while performing one task can't lead to the compromise of the rest of the application. For instance, the process that accepts HTTP connections could pass the request to a sandboxed process for parsing, and then pass the parsed request along to yet another process which is responsible for serving files and executing webapps. Privilege separation has been used effectively by OpenSSH, Postfix, qmail, Dovecot, and over a dozen daemons in OpenBSD. (Plus a couple of my own: titus and rdiscd.) However, privilege separation requires careful design to determine where to draw the privilege boundaries and how to interface between them. It's not something which an external tool such as systemd can provide. (Note: Apache already implements privilege separation that allows it to process requests as a non-root user, but it is too coarse-grained to stop the attacks described here.)

Next Samba, which is a curious choice of example by Strauss. Having configured Samba and professionally administered Windows networks, I know that Samba cannot run without full root privilege. The reason why Samba needs privilege is not because it binds to privileged ports, but because, as a file server, it needs the ability to assume the identity of any user so it can read and write that user's files. One could imagine a different design of Samba in which all files are owned by the same unprivileged user, and Samba maintains a database to track the real ownership of each file. This would allow Samba to run without privilege, but it wouldn't necessarily be more secure than the current design, since it would mean that a post-authentication vulnerability would yield access to everyone's files, not just those of the authenticated user. (Note: I'm not sure if Samba is able to contain a post-authentication vulnerability, but it theoretically could. It absolutely could not if it ran as a single user under systemd's sandboxing.)

Other daemons are similar. A mail server needs access to all users' mailboxes. If the mail server is written in C, and doesn't use privilege separation, sandboxing it with systemd won't stop an attacker with remote code execution from reading every user's mailbox. I could continue with other daemons, but I think I've made my point: systemd is not magic pixie dust that can be sprinkled on insecure server applications to make them secure. For protecting the "data attackers want," systemd is far from a "powerful" tool. I wouldn't be opposed to using a library or standalone tool to sandbox daemons as a last line of defense, but the amount of security it provides is not worth the baggage of running systemd as PID 1.

Achieving meaningful improvement in software security won't be as easy as adding a few lines to a systemd config file. It will require new approaches, new tools, new languages. Jon Evans sums it up eloquently:

... as an industry, let's at least set a trajectory. Let's move towards writing system code in better languages, first of all -- this should improve security and speed. Let's move towards formal specifications and verification of mission-critical code.

Systemd is not part of this trajectory. Systemd is more of the same old, same old, but with vastly more code and complexity, an illusion of security features, and, most troubling, lock-in. (Strauss dismisses my lock-in concerns by dishonestly claiming that applications aren't encouraged to use their non-standard DBUS API for DNS resolution. Systemd's own documentation says "Usage of this API is generally recommended to clients." And while systemd doesn't preclude alternative implementations, systemd's specifications are not developed through a vendor-neutral process like the IETF, so there is no guarantee that other implementers would have an equal seat at the table.) I have faith that the Linux ecosystem can correct its trajectory. Let's start now, and stop following systemd down the primrose path.

September 28, 2016

How to Crash Systemd in One Tweet

The following command, when run as any user, will crash systemd:

NOTIFY_SOCKET=/run/systemd/notify systemd-notify ""

After running this command, PID 1 is hung in the pause system call. You can no longer start and stop daemons. inetd-style services no longer accept connections. You cannot cleanly reboot the system. The system feels generally unstable (e.g. ssh and su hang for 30 seconds since systemd is now integrated with the login system). All of this can be caused by a command that's short enough to fit in a Tweet.

Edit (2016-09-28 21:34): Some people can only reproduce if they wrap the command in a while true loop. Yay non-determinism!

The bug is remarkably banal. The above systemd-notify command sends a zero-length message to the world-accessible UNIX domain socket located at /run/systemd/notify. PID 1 receives the message and fails an assertion that the message length is greater than zero. Despite the banality, the bug is serious, as it allows any local user to trivially perform a denial-of-service attack against a critical system component.
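The following sketch shows that a zero-length datagram is a perfectly ordinary input on a UNIX datagram socket, and how a receiver can reject it rather than assert that it cannot happen. It does not touch systemd: a local socket pair stands in for the notify socket, and handle_notification is a hypothetical handler.

```python
import socket


def handle_notification(message):
    """Return the decoded message, or None for input that should be ignored."""
    if len(message) == 0:
        # Untrusted input: reject it instead of asserting it cannot happen.
        return None
    return message.decode("utf-8", errors="replace")


# A connected pair of datagram sockets stands in for the notify socket.
receiver, sender = socket.socketpair(socket.AF_UNIX, socket.SOCK_DGRAM)
sender.send(b"")            # zero-length datagram, like systemd-notify ""
empty = receiver.recv(4096)
sender.send(b"READY=1")     # an ordinary notification
ready = receiver.recv(4096)
sender.close()
receiver.close()
```

The empty datagram arrives as an empty byte string, and the handler simply ignores it and carries on with the next message.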

The immediate question raised by this bug is what kind of quality assurance process would allow such a simple bug to exist for over two years (it was introduced in systemd 209). Isn't the empty string an obvious test case? One would hope that PID 1, the most important userspace process, would have better quality assurance than this. Unfortunately, it seems that crashes of PID 1 are not unusual, as a quick glance through the systemd commit log reveals commit messages such as:

- coredump: turn off coredump collection only when PID 1 crashes, not when journald crashes

- coredump: make sure to handle crashes of PID 1 and journald special

- coredump: turn off coredump collection entirely after journald or PID 1 crashed

Systemd's problems run far deeper than this one bug. Systemd is defective by design. Writing bug-free software is extremely difficult. Even good programmers would inevitably introduce bugs into a project of the scale and complexity of systemd. However, good programmers recognize the difficulty of writing bug-free software and understand the importance of designing software in a way that minimizes the likelihood of bugs or at least reduces their impact. The systemd developers understand none of this, opting to cram an enormous amount of unnecessary complexity into PID 1, which runs as root and is written in a memory-unsafe language.

Some degree of complexity is to be expected, as systemd provides a number of useful and compelling features (although they did not invent them; they were just the first to aggressively market them). Whether or not systemd has made the right trade-off between features and complexity is a matter of debate. What is not debatable is that systemd's complexity does not belong in PID 1. As Rich Felker explained, the only job of PID 1 is to execute the real init system and reap zombies. Furthermore, the real init system, even when running as a non-PID 1 process, should be structured in a modular way such that a failure in one of the riskier components does not bring down the more critical components. For instance, a failure in the daemon management code should not prevent the system from being cleanly rebooted.

In particular, any code that accepts messages from untrustworthy sources like systemd-notify should run in a dedicated process as an unprivileged user. The unprivileged process parses and validates messages before passing them along to the privileged process. This is called privilege separation and has been a best practice in security-aware software for over a decade. Systemd, by contrast, does text parsing on messages from untrusted sources, in C, running as root in PID 1. If you think systemd doesn't need privilege separation because it only parses messages from local users, keep in mind that in the Internet era, local attacks tend to acquire remote vectors. Consider Shellshock, or the presentation at this year's systemd conference which is titled "Talking to systemd from a Web Browser."
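Here is a very rough sketch of the shape of privilege separation, under heavy assumptions. The message format (KEY=VALUE lines) and both function names are hypothetical, and the child below does not actually drop privileges; a real design would switch to an unprivileged user and confine the child before it touches any input. What the sketch does show is the process boundary: a crash or rejection in the parser ends the child, not the parent.

```python
import json
import os


def parse_message(raw):
    """Parse KEY=VALUE lines; raises ValueError on malformed input."""
    if not raw:
        raise ValueError("empty message")
    fields = {}
    for line in raw.decode("utf-8").splitlines():
        key, sep, value = line.partition("=")
        if not sep or not key:
            raise ValueError("malformed line")
        fields[key] = value
    return fields


def parse_in_child(raw):
    """Parse raw in a separate process; return the fields, or None if rejected."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child: a real design would drop privileges here before parsing.
        exit_code = 1
        try:
            os.close(read_fd)
            os.write(write_fd, json.dumps(parse_message(raw)).encode("utf-8"))
            exit_code = 0
        finally:
            os._exit(exit_code)
    # Parent: collect whatever the child produced, then check how it exited.
    os.close(write_fd)
    with os.fdopen(read_fd, "rb") as pipe:
        data = pipe.read()
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status) and os.WEXITSTATUS(status) == 0:
        return json.loads(data.decode("utf-8"))
    return None
```

For example, parse_in_child(b"") returns None instead of taking the calling process down. A production design would also treat the child's output as untrusted and validate it again in the parent.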

Systemd's "we don't make mistakes" attitude towards security can be seen in other places, such as this code from the main() function of PID 1:

    /* Disable the umask logic */
    if (getpid() == 1)
            umask(0);

Setting a umask of 0 means that, by default, any file created by systemd will be world-readable and -writable. Systemd defines a macro called RUN_WITH_UMASK which is used to temporarily set a more restrictive umask when systemd needs to create a file with different permissions. This is backwards. The default umask should be restrictive, so forgetting to change the umask when creating a file would result in a file that obviously doesn't work. This is called fail-safe design. Instead systemd is fail-open, so forgetting to change the umask (which has already happened twice) creates a file that works but is a potential security vulnerability.
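The difference between the two defaults can be illustrated with a small experiment. The context manager below is a hypothetical Python analogue of a scoped umask change, not systemd's macro, and the file names are made up. Each file is requested with mode 0666; what it ends up with depends only on the umask in effect.

```python
import contextlib
import os
import stat
import tempfile


@contextlib.contextmanager
def run_with_umask(mask):
    """Temporarily set the process umask, restoring the old one afterwards."""
    previous = os.umask(mask)
    try:
        yield
    finally:
        os.umask(previous)


def create_file(path):
    """Create a file requesting mode 0666 and return the mode it actually got."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o666)
    os.close(fd)
    return stat.S_IMODE(os.stat(path).st_mode)


with tempfile.TemporaryDirectory() as tmp:
    with run_with_umask(0o077):      # fail-safe default
        private = create_file(os.path.join(tmp, "private"))
        with run_with_umask(0o022):  # deliberate, local relaxation
            shared = create_file(os.path.join(tmp, "shared"))
    with run_with_umask(0o000):      # fail-open default, as in the code above
        forgotten = create_file(os.path.join(tmp, "forgotten"))
```

With the restrictive default, forgetting to relax the umask yields a file only its owner can use (0600), which tends to be noticed quickly. With a default of 0, the forgotten case silently yields a world-writable file (0666).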

The Linux ecosystem has fallen behind other operating systems in writing secure and robust software. While Microsoft was hardening Windows and Apple was developing iOS, open source software became complacent. However, I see improvement on the horizon. Heartbleed and Shellshock were wake-up calls that have led to increased scrutiny of open source software. Go and Rust are compelling, safe languages for writing the type of systems software that has traditionally been written in C. Systemd is dangerous not only because it is introducing hundreds of thousands of lines of complex C code without any regard to longstanding security practices like privilege separation or fail-safe design, but because it is setting itself up to be irreplaceable. Systemd is far more than an init system: it is becoming a secondary operating system kernel, providing a log server, a device manager, a container manager, a login manager, a DHCP client, a DNS resolver, and an NTP client. These services are largely interdependent and provide non-standard interfaces for other applications to use. This makes any one component of systemd hard to replace, which will prevent more secure alternatives from gaining adoption in the future.

Consider systemd's DNS resolver. DNS is a complicated, security-sensitive protocol. In August 2014, Lennart Poettering declared that "systemd-resolved is now a pretty complete caching DNS and LLMNR stub resolver." In reality, systemd-resolved failed to implement any of the documented best practices to protect against DNS cache poisoning. It was vulnerable to Dan Kaminsky's cache poisoning attack which was fixed in every other DNS server during a massive coordinated response in 2008 (and which had been fixed in djbdns in 1999). Although systemd doesn't force you to use systemd-resolved, it exposes a non-standard interface over DBUS which they encourage applications to use instead of the standard DNS protocol over port 53. If applications follow this recommendation, it will become impossible to replace systemd-resolved with a more secure DNS resolver, unless that DNS resolver opts to emulate systemd's non-standard DBUS API.

It is not too late to stop this. Although almost every Linux distribution now uses systemd for their init system, init was a soft target for systemd because the systems they replaced were so bad. That's not true for the other services which systemd is trying to replace such as network management, DNS, and NTP. Systemd offers very few compelling features over existing implementations, but does carry a large amount of risk. If you're a system administrator, resist the replacement of existing services and hold out for replacements that are more secure. If you're an application developer, do not use systemd's non-standard interfaces. There will be better alternatives in the future that are more secure than what we have now. But adopting them will only be possible if systemd has not destroyed the modularity and standards-compliance that make innovation possible.

February 5, 2016

Domain Validation Vulnerability in Symantec Certificate Authority

Symantec was disregarding + and = characters in email addresses when parsing WHOIS records, allowing certificate misissuance for domains whose WHOIS contacts contained these characters. The vulnerability has been reported and fixed. Read on for more...

There are three common ways for the requester of a domain-validated SSL certificate to prove control over the domain in the certificate request: add a record to the domain's DNS, publish a file on the domain's website, or respond to an email sent to an administrative address at the domain. The basic idea is that getting a DV certificate should require doing something that only the domain administrator can do. Adding a DNS record is the best way to ensure this: it's unlikely that anyone but the administrator could add records to a domain's DNS. Publishing a file on the domain's website is pretty good too, although some websites accept user uploads in a way that might be abused.

Email validation, on the other hand, is the worst way. Besides being impossible to automate, it's certainly the easiest to abuse. One problem is that the person receiving an email at an "administrative" address might not actually be an administrator. This is a big problem for email service providers who allow users to register arbitrary email addresses at their domains. In 2008, Mike Zusman was able to register the email address sslcertificates@live.com and use it to approve an SSL certificate for live.com. Back then, certificate authorities allowed you to choose from quite a large list of possible administrative addresses, which compounded the problem. Now, the Baseline Requirements (the rules governing public certificate issuance) define the administrative addresses as admin@, administrator@, hostmaster@, postmaster@, and webmaster@, which helps but is not a panacea: just last year, an unnamed Finn was able to register hostmaster@live.fi and use it to obtain a certificate for live.fi.

The Baseline Requirements also allow email addresses from the domain's WHOIS record to be used for certificate approval. These addresses don't suffer from the problem above: the WHOIS record is controlled by the domain's registrant, who wouldn't list an email address if it didn't belong to an administrator of the domain. Unfortunately, there's a pretty major implementation pitfall awaiting those who use WHOIS: WHOIS records are not machine-readable. They are unstructured, human-readable text. Even worse, every TLD uses its own format!

How does one take an unstructured, human-readable document, and extract some bit of information such as an email address from it in realtime? I suspect that most solutions are going to involve a regular expression that matches a sequence of characters that look like an email address. What constitutes a valid email address? The answer may surprise you. The relevant standard, RFC 5322, allows a shocking assortment of characters to appear in the local part (the part to the left of the at sign) of an email address, including, but not limited to, +, =, !, #, {, `, and ^. If you escape or quote the local part, you can even include control characters, although the madness of permitting control characters is considered obsolete.

If a certificate authority does not properly consider the full range of characters when parsing a WHOIS record, they risk extracting the wrong email address for a domain, allowing an unauthorized party to obtain certificates for it. Last October, I discovered that Symantec's DV certificate products (RapidSSL, QuickSSL) did not consider + and = characters when parsing WHOIS records. If an email address in WHOIS contained either character, Symantec would treat the part of the address following the character as a valid administrative address. For example, if a domain's WHOIS contact was this:

andrew+whois@gmail.com

then Symantec would allow the following address to approve certificates for the domain:

whois@gmail.com

This was a serious flaw. + is commonly used in email addresses for sub-addressing, which permits person+anything@example.com to be used as an alias for person@example.com. Several popular email service providers, including Gmail, Outlook.com, and Fastmail support sub-addressing with the plus character, and I know from my experience running SSLMate that it is not uncommon for domain administrators to use an email address such as person+whois@gmail.com for their WHOIS contact. An attacker could register whois@gmail.com and fraudulently obtain certificates from Symantec for any domain whose WHOIS contact followed this pattern.
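I don't know what Symantec's parser actually looked like, but the following sketch reproduces the same class of mistake. Both patterns are my own illustrations: the first accepts only a narrow set of characters in the local part, and the second accepts the unquoted "dot-atom" form from RFC 5322 (quoted local parts are deliberately left out).

```python
import re

# Naive pattern: only letters, digits, dot, underscore and hyphen are
# accepted in the local part.
NAIVE_EMAIL = re.compile(r"[A-Za-z0-9._-]+@[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+")

# Unquoted local parts per RFC 5322 "dot-atom": runs of atext separated by dots.
ATEXT = r"[A-Za-z0-9!#$%&'*+/=?^_`{|}~-]"
RFC_EMAIL = re.compile(
    ATEXT + r"+(?:\." + ATEXT + r"+)*@[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+"
)

whois_line = "Registrant Email: andrew+whois@gmail.com"
naive_result = NAIVE_EMAIL.findall(whois_line)
rfc_result = RFC_EMAIL.findall(whois_line)
```

The naive pattern skips over "andrew+" because + is not in its character set, and then happily matches whois@gmail.com, a different mailbox from the one in the record. The second pattern returns the full address.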

As a proof of concept, I set all three WHOIS email addresses of a test domain, cloudpork.com, to alice+bob@cloudpork.com:

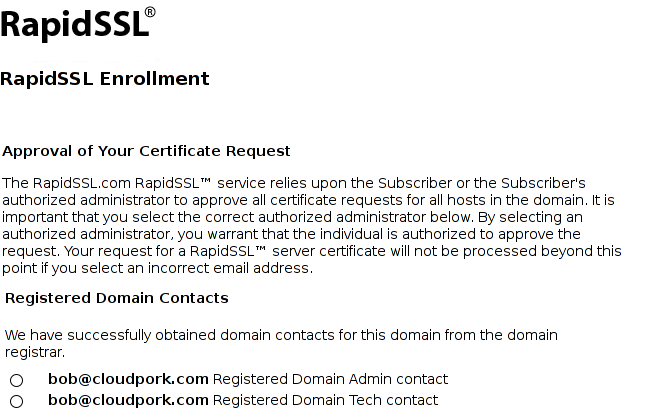

    $ whois cloudpork.com | grep Email
    Registrant Email: ALICE+BOB@CLOUDPORK.COM
    Admin Email: ALICE+BOB@CLOUDPORK.COM
    Tech Email: ALICE+BOB@CLOUDPORK.COM
    Registrar Abuse Contact Email: abuse@enom.com

I then went to RapidSSL to obtain a DV certificate for www.cloudpork.com, and was presented with the following choice of email addresses:

I selected bob@cloudpork.com, received the approval email at that address, and after approving the certificate was issued a valid SSL certificate for www.cloudpork.com, despite bob@cloudpork.com not being a valid administrative address for cloudpork.com.

I reported the vulnerability to Symantec on October 21, 2015. I also reported it to the four major trust store operators (Google, Mozilla, Microsoft, and Apple), which I believe is appropriate for vulnerabilities in publicly-trusted certificate authorities. Symantec reported that the issue was fixed on October 28, which I confirmed. Symantec then conducted a rather lengthy audit of previously-issued certificates to ensure that this vulnerability had not been exploited. The vulnerability was publicly disclosed yesterday.

In the end, I was able to confirm that Symantec properly handled +, =, -, _, and . characters in email addresses. I wish I could have tested with additional non-alphanumeric characters, but I couldn't find a domain registrar who would let me include such characters in WHOIS. Fortunately, the likelihood of someone using a special character besides +, =, -, _, or . in their domain's WHOIS contact is pretty low. Furthermore, email providers tend not to allow such bizarre characters in email addresses, so if someone were to use such an email address, it would most likely be hosted at their own domain, where the risk of the email being misdirected to an unauthorized person would be low.

I'm glad this vulnerability is fixed, but it serves as a reminder that the certificate authority system still has much room for improvement. I have high hopes for Certificate Transparency, a system which would require certificate authorities to log all certificates they issue to public, append-only, and auditable logs (browsers would enforce this by only accepting a certificate if it was accompanied by cryptographic proof of the certificate's inclusion in a log). Domain owners could monitor these logs for certificates related to their domain, so if a vulnerability such as this one were exploited to misissue certificates, it would be detected. The Certificate Transparency experiment is already underway: many certificates have been logged, and can be searched using the awesome crt.sh tool from Comodo. I'm working on some of my own tools to help domain owners use Certificate Transparency; stay tuned!
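To give a feel for what monitoring could look like from the domain owner's side, here is a minimal sketch. It does not talk to any real log or to crt.sh; the entry format (a dict with an issuer and a list of DNS names), the issuer names, and the sample data are all hypothetical.

```python
def name_covers_domain(dns_name, domain):
    """True if a certificate DNS name is the domain or falls under it."""
    name = dns_name.lower().rstrip(".")
    if name.startswith("*."):
        name = name[2:]
    domain = domain.lower()
    return name == domain or name.endswith("." + domain)


def unexpected_certificates(entries, domain, expected_issuers):
    """Return log entries for the domain whose issuer is not expected."""
    return [
        entry for entry in entries
        if entry["issuer"] not in expected_issuers
        and any(name_covers_domain(n, domain) for n in entry["dns_names"])
    ]


# Made-up log entries for illustration only.
sample_entries = [
    {"issuer": "Expected CA", "dns_names": ["www.example.com"]},
    {"issuer": "Unknown CA", "dns_names": ["*.example.com"]},
    {"issuer": "Unknown CA", "dns_names": ["notexample.com"]},
]
flagged = unexpected_certificates(sample_entries, "example.com", {"Expected CA"})
```

Only the wildcard certificate from the unexpected issuer is flagged. The certificate for notexample.com is ignored because it merely shares a suffix with the monitored domain.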